
How to install PySpark on Eclipse in Windows

Install the Microsoft Visual C++ 2010 Redistributable Package (x64). Then set the environment variable HADOOP_HOME to C:\hadoop and SPARK_HOME to C:\spark, and add %HADOOP_HOME%\bin and %SPARK_HOME%\bin to your Path.

There are two approaches to setting up PySpark in an IDE:

- Using pip, i.e. pip install pyspark, as mentioned in the linked question (E0401: Unable to import pyspark in VSCode in Windows 10).
- Appending the PySpark modules to the PYTHONPATH variable, as mentioned in the following articles.
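To make the environment-variable step concrete, here is a minimal Python sketch that only computes the values described above: HADOOP_HOME and SPARK_HOME, the two bin folders added to Path, and, for the PYTHONPATH approach, the SPARK_HOME\python folder plus the Py4J archive bundled under SPARK_HOME\python\lib. The function names are hypothetical, and the Py4J file name is passed in because its version depends on the Spark release, so check that folder for the real name. The sketch does not change any settings; you would still set the variables through the System Properties dialog or a tool such as setx.

```python
import ntpath  # Windows path rules, usable on any OS


def spark_env_vars(spark_home=r"C:\spark", hadoop_home=r"C:\hadoop", current_path=""):
    """Return HADOOP_HOME, SPARK_HOME and an updated PATH as a dict (hypothetical helper).

    Nothing is written to the system; the values are only computed.
    """
    bin_dirs = [ntpath.join(hadoop_home, "bin"), ntpath.join(spark_home, "bin")]
    # Put the two bin folders in front of any existing PATH, joined with ';' as on Windows.
    path_parts = bin_dirs + ([current_path] if current_path else [])
    return {
        "HADOOP_HOME": hadoop_home,
        "SPARK_HOME": spark_home,
        "PATH": ";".join(path_parts),
    }


def pyspark_pythonpath(spark_home, py4j_zip):
    """Return the entries to append to PYTHONPATH for the non-pip approach.

    py4j_zip is the name of the py4j-<version>-src.zip file found in
    SPARK_HOME\\python\\lib; the version differs between Spark releases.
    """
    python_dir = ntpath.join(spark_home, "python")
    return [python_dir, ntpath.join(python_dir, "lib", py4j_zip)]


if __name__ == "__main__":
    env = spark_env_vars(current_path=r"C:\Windows\System32")
    print(env["PATH"])  # C:\hadoop\bin;C:\spark\bin;C:\Windows\System32
    # "py4j-src.zip" is a placeholder name, not a real release file.
    print(";".join(pyspark_pythonpath(env["SPARK_HOME"], "py4j-src.zip")))
```

The two helpers mirror the two approaches: with pip you only need the first one (if at all), while the PYTHONPATH approach needs both.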

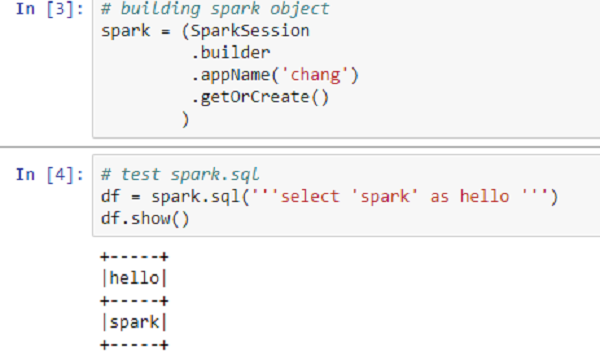

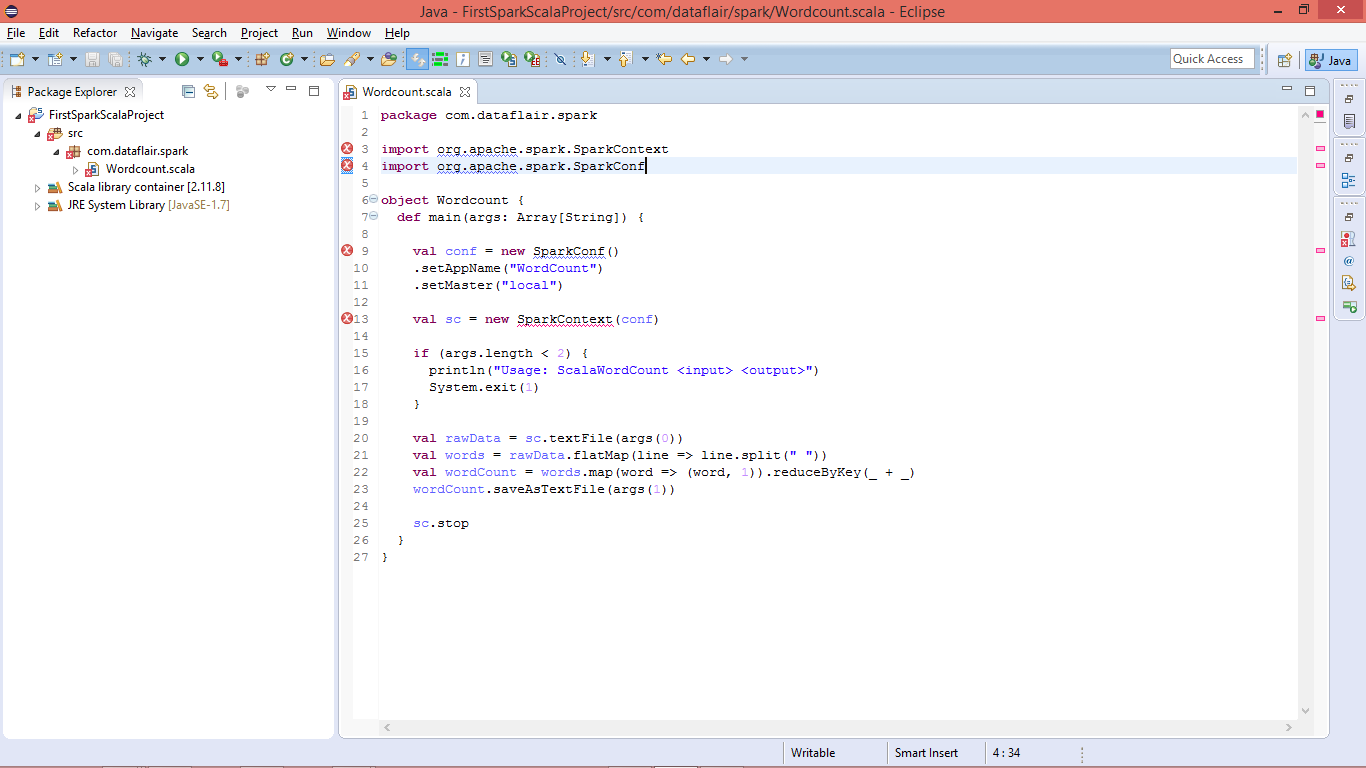

Open Anaconda Prompt and activate the environment where you want to install PySpark. I will be installing PySpark in an environment named PythonFinance.

Make sure you install Java in the root folder, C:\java, because Windows doesn't handle spaces in the path well. During installation, after changing the path, select the Path setting and click Install. Eclipse should restart after the installation process completes.

Now that you have completed the installation step, we'll create our first Spark project in Java. Open Eclipse, select File > New Project > Maven Project, and then add the external JARs from D:\spark\spark-2.0.1-bin-hadoop2.7\lib.
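Before creating the project, it can help to confirm from the activated Anaconda environment that PySpark is importable and that Java sits in a path without spaces. The following minimal Python sketch does both. It assumes, which the steps above do not state, that the Java location is exposed through a JAVA_HOME environment variable, and both function names are hypothetical.

```python
import importlib.util
import os
import sys


def pyspark_installed():
    """Return True if the pyspark package can be imported by this interpreter."""
    return importlib.util.find_spec("pyspark") is not None


def check_java_home(env=None):
    """Return a short status message about JAVA_HOME (hypothetical helper).

    JAVA_HOME is an assumption of this sketch; the advice being checked is
    simply that the Java install path should contain no spaces.
    """
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME")
    if not java_home:
        return "JAVA_HOME is not set"
    if " " in java_home:
        return f"JAVA_HOME contains a space: {java_home}"
    return f"JAVA_HOME looks fine: {java_home}"


if __name__ == "__main__":
    # Run from the activated Anaconda environment (e.g. PythonFinance).
    print("Interpreter:", sys.executable)
    print("pyspark importable:", pyspark_installed())
    print(check_java_home())
```

Run it with python from the Anaconda Prompt after activating the environment; sys.executable shows which environment's interpreter is actually being used, which is a common source of "module not found" surprises.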
